type

status

date

slug

summary

tags

category

password

icon

Domain & Institution

Author

Priority

Abstract

Creation Date

Introduction

This blog post will explain the derivation of the Bellman Equation, a fundamental concept in dynamic programming used to solve optimization problems. The equation's significance lies in its ability to break down complex problems into more manageable subproblems, enabling the identification of global optimal solutions. The Bellman Equation is highly versatile, with applications across various domains including shortest path problems, resource allocation, control theory, and reinforcement learning.

Before delving into the Bellman Equation, let's first explore some key concepts.

Markov Process

Markov Property

The Markov Property characterizes a stochastic process where the future state depends solely on its present state, not on the sequence of preceding events. In essence, it implies that "given the present, the future is independent of the past."

is the set of random variables ; is the state sequence.

Markov Chain

A Markov Chain is a stochastic process that exhibits the Markov Property. This means the system's future state depends solely on its current state, not on the sequence of previous states.

Assume we have a random state sequence , the next time state depends only on current state . We can assume the historical state is a set , includes any previous states, and then, markov process satisfies the following condition:

This indicates that the future state is independent of the history given the current state , adhering to the Markov property.

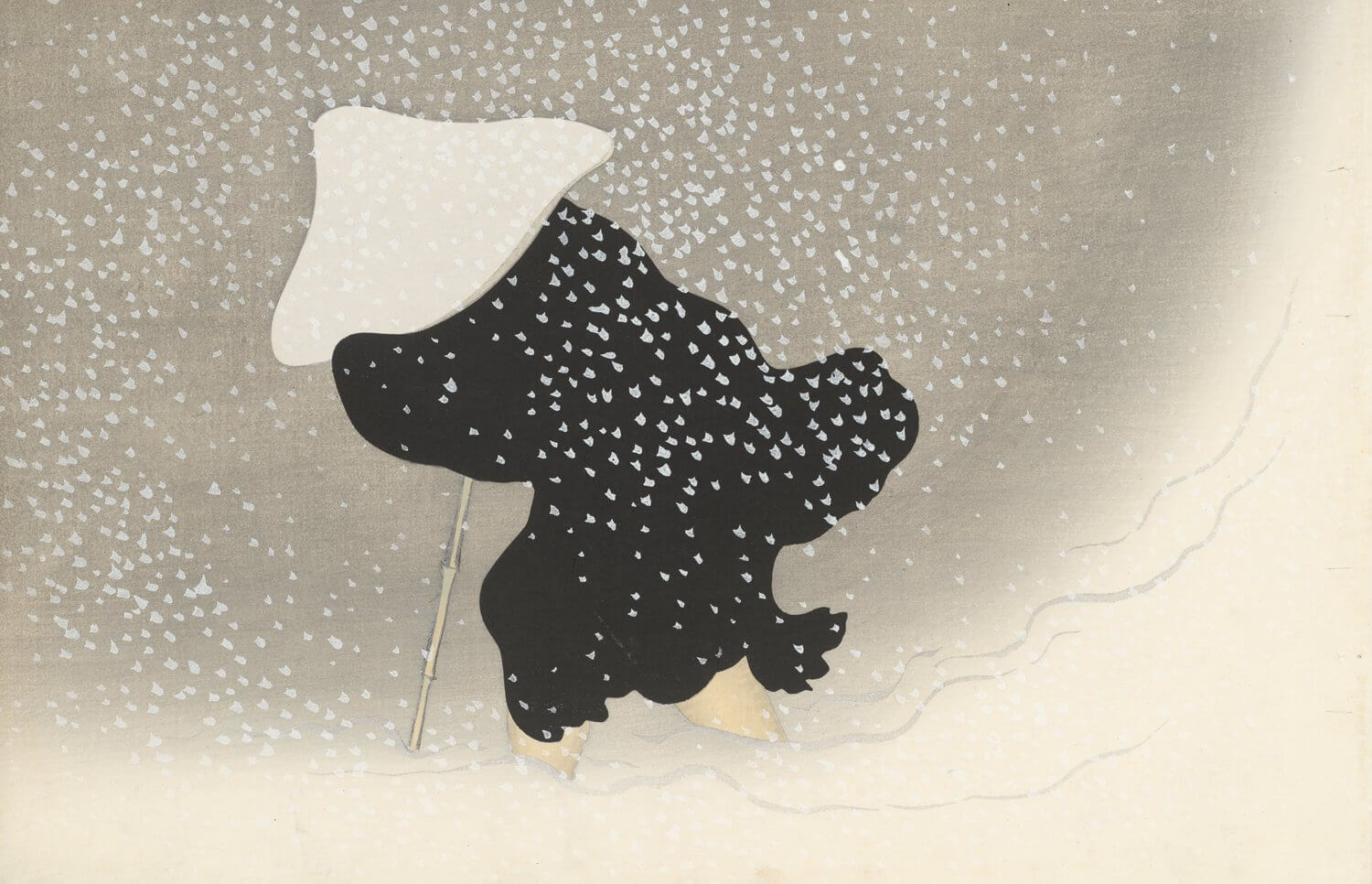

Here's an example of a Markov Process:

Let me explain this picture. There are four states transition between each other. Starting with ,it has the probability of stays at the current state, and has probability to transfer to the state . It also has probability that transfers to state .If is the current state, it has probability to transfer to , and probability to and has stay at the current state.

<ins/>

Markov Reward Process(MRP)

A Markov Reward Process extends the Markov Chain concept by incorporating rewards. It models scenarios where, alongside state transitions, rewards are associated with each state or transition. Let's define some key concepts to better understand this process.

The return,, refers to the total cumulative reward that an agent receives, starting from a particular state, over time. It is the sum of all rewards an agent accumulates as it transitions through different states, often taking into account a discount factor to give more weight to immediate rewards and less weight to future rewards. The Math expression is as follows:

The state-value function, often denoted as is a key concept in reinforcement learning and Markov processes. It represents the expected return(total future reward) starting from a specific state and following a particular policy or set of transition rules. The state-value function helps quantify how good it is to be in a particular state, considering the long-term rewards that can be obtained from that state onward. The formula is as follows:

The discount factor is a crucial component especially when calculating the total return(sum of future rewards). In many decision-making problems, immediate rewards are often preferred over future rewards. The discount factor reflects this preference. It can also helps handle infinite time horizons because in many cases, the process continues indefinitely or for an extended period, making it hard to sum an infinite series of rewards. The discount factor causes future rewards to diminish exponentially, ensuring the total sum remains finite, even for long or infinite horizons.

So, now, we can continue to derive the equation aboved.

So, you may have a question that how to get from step(4) to step(5) ? Here is the process:

Finally, we get the Bellman Function. It is:

Let's illustrate the Bellman equation with an example:

Imagine you've scored 100 points on a challenging math exam, earning a reward of 10 points. You now face two possible transitions:

- Play a video game to reward yourself (probability 0.8), which will earn you 8 points.

- Continue studying to improve yourself (probability 0.4), which will earn you 5 points.

This scenario demonstrates how the Bellman equation calculates the value of a state by considering immediate rewards and potential future states.

Assume the discount factor is 0.5 and the state of get 100 point score in a hard math exam is defined as , so the reward is defined as . The state of playing a video game is defined as , so the reward is defined as . Since there is no additional state after playing a video game, the return is 8. The state of continuing to learn to improve yourself is defined as , and the reward is and the return is also 5.

Since and have no additional state, the value functions of and are as follows:

Now, according to Bellman equation, we can get:

Conclusion

In this blog, I've outlined the process of deriving the Bellman Equation, a critical concept in reinforcement learning. This equation not only underpins many advanced algorithms but also provides valuable insights into understanding Markov Processes. By breaking down complex problems into manageable subproblems, the Bellman Equation offers a powerful tool for solving optimization challenges across various domains.

<ins/>

- Author:BubbleBrain

- URL:https://chengshengddeng.com/article/bellman-equation

- Copyright:All articles in this blog, except for special statements, adopt BY-NC-SA agreement. Please indicate the source!

Relate Posts